Although the first feature-length “talkie” was 1927’s The Jazz Singer, more experimental sync-sound efforts date back to the 1900 Paris Exhibition. The arrival of sound films was heralded as a huge step in the development of cinema technology and in the new possibilities it opened for film as an art form. But early efforts were primitive and didn’t allow the captured audio to be manipulated after the initial recording had been made. What you heard was what you got.

Not surprisingly, sound recording has improved quite a bit since the early 1930s. Today’s recordists benefit from highly accurate and sensitive microphones and digital recording technologies that couldn’t even be imagined in the early days. But audio artists and technologists didn’t stop at the sounds they recorded, which were only part of the finished product. They took their cues from their ears to develop a way to represent the sounds that happened in a filmed scene.

Stop reading for a moment, close your eyes and simply listen to the sounds in your environment for a few seconds. What did you hear? Perhaps there was a fan or a furnace, traffic, dogs barking, a siren, people talking or construction. Where did you hear these sounds? Maybe you heard the people talking slightly behind you and to the left, and the fan out of your right ear; maybe the siren moved from right to left as it zoomed by. Sound designers, or sound mixers as they are known in the film industry, have evolved the art of sound mixing to such a fine degree that some films could not have been as effective without the ability to invent novel sound designs. For curious readers, we highly recommend you rent Francis Ford Coppola’s The Conversation, one of the most compelling sound films made in the last 40 years. And if you can watch it with a surround sound system, so much the better.

So let’s create a hypothetical mix using a few words and our imaginations. Picture this: a man stands at a bus stop on a deserted urban street. He has a briefcase in one hand and a bag of corn chips in the other. An old car with a failing muffler drives by him from right to left. He puts the briefcase down and checks his watch. He opens the bag of chips, pulls one out and proceeds to chomp down on it. He hears a tire screech in the distance and, startled, accidentally kicks over the briefcase, which goes down with a thud. Okay, that’s pretty good.

So what do we have?

- Car with bad muffler drives right-to-left

- Sets briefcase down

- Coat moves a bit as he checks his watch

- Opens bag and reaches in for a chip

- Eats chip

- Tire screech

- Kicks over briefcase

Foley, the craft of performing everyday sounds such as footsteps and rustling clothing in sync with the picture, is merely one aspect of a sound mix. Sound effects, such as an explosion or a car crash, may be created in a studio or recorded under live circumstances. When actors speak on camera, their dialogue is recorded, sometimes with multiple microphones, enabling the sound mixer to choose the more natural or appropriate sound quality for the final mix. Music is also part of a mix, both pre-recorded music and live music produced as the video or film is being shot, like a performance by a live band.

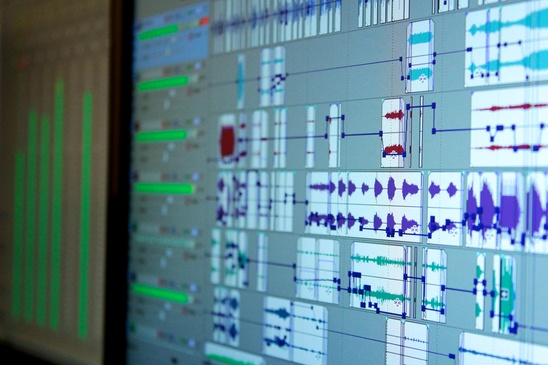

When all of these various sounds are gathered together, the sound mixer uses a sound-mixing program to assemble the clips. Popular sound-mixing software includes Avid Pro Tools, Apple’s Logic Pro, Ableton Live, and Adobe Audition. These programs are also known as digital audio workstations (DAWs) because they can create audio as well as mix it, using devices and instruments attached via MIDI (Musical Instrument Digital Interface), such as keyboards, drums and guitars.

When the sound mixer sits down to go to work, she creates multiple tracks (hence the term multitrack) on which to organize all of the sound clips or files. Often each actor receives their own track, and sometimes several. Sound effects, foley, music, narration and so forth are placed on various tracks at the points in time when those sounds are to be heard. This time axis is called the timeline; it starts at the beginning of the video and ends when the picture ends. Sync sounds, such as actors speaking and any sound effects recorded during shooting, must remain in sync with the picture, while other elements are laid into their respective tracks manually.
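To make the idea of tracks and a timeline concrete, here is a minimal Python sketch of the bus stop scene laid out as a multitrack session. It is only an illustration, not how any particular DAW stores its data: the `Clip` and `Track` classes, the `audible_at` helper, the split of sounds between an "Effects" and a "Foley" track, and every start time and duration are assumptions invented for this example.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Clip:
    """One sound placed on the timeline (hypothetical structure)."""
    name: str
    start: float     # seconds from the start of the picture
    duration: float  # seconds

    @property
    def end(self) -> float:
        return self.start + self.duration


@dataclass
class Track:
    """A named lane that holds clips, like one row in a multitrack view."""
    name: str
    clips: List[Clip] = field(default_factory=list)


def audible_at(tracks: List[Track], t: float) -> List[Tuple[str, str]]:
    """Return (track name, clip name) for every clip playing at time t."""
    return [
        (track.name, clip.name)
        for track in tracks
        for clip in track.clips
        if clip.start <= t < clip.end
    ]


# Invented timings for the bus stop scene; none come from a real session.
tracks = [
    Track("Effects", [
        Clip("car with bad muffler", 0.0, 6.0),
        Clip("tire screech", 14.0, 1.5),
    ]),
    Track("Foley", [
        Clip("briefcase set down", 7.0, 0.5),
        Clip("coat rustle", 8.0, 1.0),
        Clip("chip bag opens", 10.0, 2.0),
        Clip("chip crunch", 12.5, 1.0),
        Clip("briefcase thud", 15.0, 0.5),
    ]),
]

print(audible_at(tracks, 3.0))   # only the car is playing
print(audible_at(tracks, 15.2))  # screech and thud overlap
```

Asking what is audible at a given moment is roughly what a mixer does by eye when scanning down the tracks at the playhead: at 15.2 seconds in this invented layout, the tail of the tire screech overlaps the thud of the briefcase, so the two would need to be balanced against each other.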

The purpose of a sound mix is to ensure that the audio enhances, rather than detracts from, the quality of the overall program. A good mix can even go a long way toward saving a poorly produced or visually unappealing project.